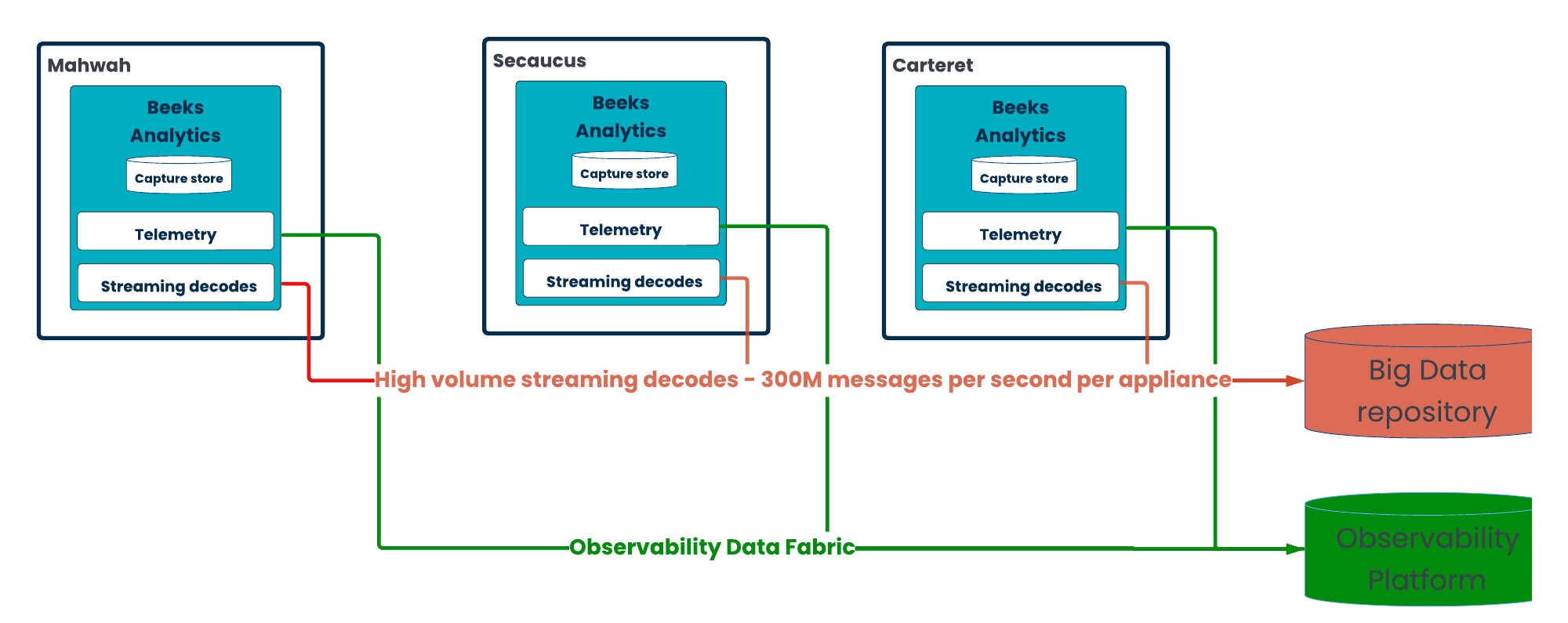

The New Jersey Triangle cluster of datacentres in Secaucus, Mahwah, and Carteret is an interesting example of how the architecture of Beeks Analytics supports firms’ central AI initiatives. These datacentres house multiple exchange matching engines and provide critical infrastructure for high-frequency trading (HFT) firms.

The U.S. stock markets produce a vast amount of market data primarily due to their size, liquidity, and competitive structure. Regulations such as Regulation NMS (National Market System) encourage competition among multiple exchanges, each generating and disseminating its own price quotations and trades. Furthermore, the depth and maturity of the derivatives markets, combined with the global significance of the U.S. economy, attract high participation volumes and rapid quote updates—ultimately contributing to an enormous amount of market data traffic.

As of 2024, NYSE/ICE and Nasdaq collectively control over 90% of U.S. equity trading volume, with the remaining activity spread across 14 other SEC-regulated exchanges such as Cboe and IEX.

Part of the reason there is so much trading across these exchanges is that, since the Unlisted Trading Privileges (UTP) Act of 1994, stocks have been allowed to trade on multiple exchanges. The primary listing exchange is therefore no longer the only exchange on which a stock can trade.

What benefit does the investor gain from having a choice of exchanges to trade on? Different exchanges might offer different fee structures, or slightly different algorithms for matching buyers and sellers of a particular stock, and one of these might suit your trading strategy better than the others. The US market structure tries to balance two needs. Institutional investors want to optimise execution costs through ‘exchange selection’ strategies and fee competition between exchanges, whilst retail traders benefit from rules that mandate routing orders to whichever venue is offering the best price at that time.
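The best-price routing idea can be sketched in a few lines. This is a minimal illustration only: the `Quote` record, the venue labels, and the prices are all hypothetical, and the sketch ignores fees, displayed size, and latency, which any real order router would have to consider.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Quote:
    venue: str   # hypothetical venue label, not a real exchange code
    bid: float   # highest price a buyer is currently willing to pay
    ask: float   # lowest price a seller is currently willing to accept


def best_venues(quotes):
    """Return (venue with the highest bid, venue with the lowest ask)."""
    if not quotes:
        raise ValueError("no quotes supplied")
    best_bid = max(quotes, key=lambda q: q.bid)
    best_ask = min(quotes, key=lambda q: q.ask)
    return best_bid.venue, best_ask.venue


# Made-up quotes for one stock across three venues.
quotes = [
    Quote("VENUE_A", bid=100.01, ask=100.04),
    Quote("VENUE_B", bid=100.02, ask=100.05),
    Quote("VENUE_C", bid=100.00, ask=100.03),
]

# A sell order goes to the highest bid, a buy order to the lowest ask.
sell_venue, buy_venue = best_venues(quotes)
```

With these made-up quotes, a sell order would route to `VENUE_B` and a buy order to `VENUE_C`. Because every venue publishes its own quotes, this comparison has to be repeated each time any of them updates, which is one reason the resulting data volumes are so large.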

Although the US equity trading market is diverse in terms of the number of venues on which stocks can be traded, it is far less diverse in terms of where these markets are hosted. NYSE/ICE host the majority of these markets in their Mahwah datacentre, with Nasdaq hosting the majority of their markets in Carteret. The Equinix Secaucus site hosts many other exchanges, as well as trading in other asset classes.

Even with this geographic concentration, capturing and monitoring the huge amount of data generated at these sites can be a challenge for a firm that wants to train models on it.

Scalable Capture

First, Beeks Analytics has the scalable capture needed to store this information efficiently. Our latest generation of appliances can capture data at 200 Gbps and, thanks to our FPGA compression technology, can store up to 2 petabytes of packet captures on the appliance.
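To put those two figures in context, the arithmetic below estimates how long 2 petabytes of captured traffic lasts at a given average link utilisation. It is a rough sketch under stated assumptions: the 10% utilisation value is purely illustrative, decimal units are used throughout, and the 2 PB figure is treated as the volume of captured traffic that can be retained (the compression ratio is not stated here, so it is not modelled).

```python
LINE_RATE_GBPS = 200   # capture rate quoted in the text
STORAGE_PB = 2         # on-appliance capture capacity quoted in the text


def hours_of_capture(line_rate_gbps, storage_pb, utilisation=1.0):
    """Hours until storage is full at a given average link utilisation.

    Decimal units: 1 Gbps = 1e9 bits per second, 1 PB = 1e15 bytes.
    `utilisation` is an assumed fraction of line rate (0 < utilisation <= 1).
    """
    if not 0 < utilisation <= 1:
        raise ValueError("utilisation must be in (0, 1]")
    bytes_per_second = line_rate_gbps * 1e9 / 8 * utilisation
    return storage_pb * 1e15 / bytes_per_second / 3600


# Sustained line rate: 2e15 bytes / 25e9 bytes per second = 80,000 seconds.
full_rate_hours = hours_of_capture(LINE_RATE_GBPS, STORAGE_PB)

# Assumed 10% average utilisation (illustrative only).
tenth_rate_hours = hours_of_capture(LINE_RATE_GBPS, STORAGE_PB, utilisation=0.1)
```

At sustained line rate this works out to roughly 22 hours of traffic, and to a little over nine days at the assumed 10% average utilisation. Real retention depends on actual traffic profiles, which are bursty around market open and close.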

If two petabytes aren’t sufficient for you, then our software allows you to offload packet captures to object-based cloud storage, whether for archiving, batch-based analysis, or regulatory purposes. Our data archiving software splits packet captures by market and provides cloud sync, saving you processing and development time. These cloud-based stores can act as the seed beds for future AI analysis work if you are still developing your approaches. By storing every packet, sensibly categorised by market, firms gain unparalleled historical visibility, which in turn enables enhanced AI model training.
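One way to picture captures that are split by market is as a predictable object key layout, so that a training job can list only the markets and days it needs. The layout below is a hypothetical sketch, not the layout the Beeks archiving software actually uses; the `pcap-archive` prefix, the `market=`/`date=`/`hour=` partition names, and the `object_key` helper are all assumptions made for illustration.

```python
from datetime import datetime, timezone


def object_key(market, capture_start, sequence, prefix="pcap-archive"):
    """Build a partitioned object key for one capture file.

    `capture_start` must be timezone-aware; it is normalised to UTC so that
    keys sort consistently regardless of where the capture was taken.
    """
    if capture_start.tzinfo is None:
        raise ValueError("capture_start must be timezone-aware")
    ts = capture_start.astimezone(timezone.utc)
    return (
        f"{prefix}/market={market}/date={ts:%Y-%m-%d}/"
        f"hour={ts:%H}/{sequence:06d}.pcap"
    )


# Example: the 42nd capture file for a market labelled XNAS.
key = object_key(
    "XNAS", datetime(2024, 3, 1, 14, 30, tzinfo=timezone.utc), 42
)
```

Here `key` is `pcap-archive/market=XNAS/date=2024-03-01/hour=14/000042.pcap`. Partitioning on market and date means a batch job can select its inputs with a simple prefix listing instead of scanning the whole archive.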

Flexible Decoders

However, once your central AI initiative starts to process data you’ve stored in the cloud or on your packet capture appliances, you’ll need to make sense of the packet data. Large Language Models show promise in analysing individual PCAPs one at a time, but to use PCAPs for AI at scale you need to systematically extract features from them rather than feeding the raw captures into a large model.

The Beeks Analytics VMX-Capture component doesn’t just provide the software and qualified appliance hardware for writing the captures. It also provides the feature extraction which AI models demand.

Our VMX-Capture network decoders are much more efficient at bulk PCAP processing than free tools like Wireshark, tshark, pyshark and their associated tools. And with 200+ financial-market and enterprise decoders available, you don’t need to waste valuable development resources building and maintaining these yourself.

These decoders can both output statistical summaries of the packet capture contents and provide normalised message output of some or all of the packet streams. These normalised outputs will be easier to work with for many AI use cases than the raw packet captures. The flexible nature of the VMX-Capture configuration allows you to extract the fields you need from the packet captures to simplify your AI workload.
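To make the idea of a statistical summary concrete, here is a standard-library sketch that reads a classic pcap file and derives a few packet-level features. It is not VMX-Capture and does not decode any market protocol; the function names are hypothetical, and it assumes the classic pcap format with microsecond timestamps (not pcapng or the nanosecond variant). A synthetic in-memory capture stands in for real data.

```python
import struct
from statistics import mean

PCAP_MAGIC_USEC = 0xA1B2C3D4  # classic pcap, microsecond timestamps
GLOBAL_HEADER_LEN = 24
RECORD_HEADER_LEN = 16


def iter_records(data):
    """Yield (timestamp_seconds, captured_length) for each packet record."""
    magic = struct.unpack_from("<I", data, 0)[0]
    if magic == PCAP_MAGIC_USEC:
        endian = "<"   # file was written little-endian
    elif magic == 0xD4C3B2A1:
        endian = ">"   # file was written big-endian
    else:
        raise ValueError("not a classic microsecond pcap file")
    offset = GLOBAL_HEADER_LEN
    while offset + RECORD_HEADER_LEN <= len(data):
        ts_sec, ts_usec, incl_len, _orig_len = struct.unpack_from(
            endian + "IIII", data, offset
        )
        yield ts_sec + ts_usec / 1e6, incl_len
        offset += RECORD_HEADER_LEN + incl_len


def summarise(data):
    """Return simple per-capture features: counts, sizes, inter-arrival gaps."""
    records = list(iter_records(data))
    times = [t for t, _ in records]
    sizes = [n for _, n in records]
    gaps = [later - earlier for earlier, later in zip(times, times[1:])]
    return {
        "packets": len(records),
        "bytes": sum(sizes),
        "mean_size": mean(sizes) if sizes else 0.0,
        "mean_gap_s": mean(gaps) if gaps else 0.0,
    }


def synthetic_pcap(packets):
    """Build an in-memory pcap from (ts_sec, ts_usec, payload) tuples."""
    # magic, version 2.4, timezone offset, sigfigs, snaplen, linktype (1 = Ethernet)
    header = struct.pack("<IHHiIII", PCAP_MAGIC_USEC, 2, 4, 0, 0, 65535, 1)
    body = b"".join(
        struct.pack("<IIII", sec, usec, len(p), len(p)) + p
        for sec, usec, p in packets
    )
    return header + body


capture = synthetic_pcap([
    (0, 0, b"\x00" * 60),
    (0, 500, b"\x00" * 100),
    (0, 1500, b"\x00" * 80),
])
summary = summarise(capture)
```

For the three synthetic packets, `summary` reports 3 packets, 240 captured bytes, and a mean size of 80 bytes. A production decoder would go much further, parsing the market protocol inside each payload to produce normalised messages, but the shape of the output (compact features instead of raw bytes) is the same.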

Streaming Use Cases

However, there are other use cases where you need streaming access to wire data. Perhaps you need the data as close to real-time as possible. Or perhaps you need systematic collection of session information about the conversations that are taking place over your network.

We can provide statistics and telemetry over Kafka or as an OpenTelemetry publisher.

Streaming is also a good technique where you don’t want the full packet capture – maybe you only want to look at certain fields from the messages.
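Selecting only certain fields from a stream of decoded messages can be sketched as a lazy projection. The message shape, field names, and `project` helper below are hypothetical and stand in for whatever normalised output your decoders are configured to emit.

```python
def project(messages, fields):
    """Lazily yield each message reduced to the requested fields.

    Fields missing from a message are skipped instead of raising, so one
    projection can be applied across mixed message types.
    """
    wanted = tuple(fields)
    for msg in messages:
        yield {name: msg[name] for name in wanted if name in msg}


# Hypothetical decoded messages; a live stream would be an iterator.
decoded = [
    {"ts": 1, "symbol": "ABC", "price": 100.01, "size": 200, "venue": "VENUE_A"},
    {"ts": 2, "symbol": "XYZ", "price": 55.50, "size": 100, "venue": "VENUE_B"},
]

slim = list(project(decoded, ["ts", "symbol", "price"]))
```

Because `project` is a generator, it never holds the full stream in memory, which matters when the input is a continuous feed and not a finite list.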

Our high-performance decoding layer can capture, decode and egress up to 300 million messages per second per appliance, giving you the visibility you need in your data lakes for some of the most demanding financial data.
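A quick calculation shows what a rate of 300 million messages per second implies for anything downstream. The 64-byte average message size used below is an assumption chosen for illustration; actual normalised message sizes depend on the fields you configure.

```python
MESSAGES_PER_SECOND = 300_000_000  # figure quoted in the text
ASSUMED_AVG_MESSAGE_BYTES = 64     # illustrative assumption, not a Beeks figure


def per_message_budget_ns(rate):
    """Average time available per message, in nanoseconds, at a given rate."""
    return 1e9 / rate


def egress_gbps(rate, avg_message_bytes):
    """Egress bandwidth in Gbps for a message rate and average message size."""
    return rate * avg_message_bytes * 8 / 1e9


budget_ns = per_message_budget_ns(MESSAGES_PER_SECOND)
bandwidth = egress_gbps(MESSAGES_PER_SECOND, ASSUMED_AVG_MESSAGE_BYTES)
```

That is an average budget of about 3.3 nanoseconds per message and, under the 64-byte assumption, roughly 154 Gbps of egress. Numbers of this size are why consumers of the stream usually need to batch and parallelise their own ingestion.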

For even higher volume requirements, we can stream using Apache Arrow or transfer the data in the Parquet format. Both are columnar formats that let you move messages in batches and so reach higher message rates.

Case Study Conclusions

In a market where the volume and velocity of financial data continue to rise, firms need a robust solution to capture, decode, and analyse this information efficiently. Beeks Analytics provides a comprehensive suite of tools that not only handle the scale of market data but also offer the flexibility needed for AI-driven insights. Whether through high-speed packet capture, advanced decoding, or real-time streaming, our solutions empower firms to extract value from their centralised data with minimal overhead. By leveraging scalable storage, efficient processing, and seamless integration with AI workflows, Beeks Analytics helps firms stay ahead in a competitive landscape where data-driven decision-making is critical.