Beeks supports many output formats for its Core Data Feed (CDF). We recommend Kafka transport with JSON message encoding, because this setup can be configured with standard configuration/DevOps tooling that both developers and non-developers can use. We describe the other supported output mechanisms here.

Kafka introduction

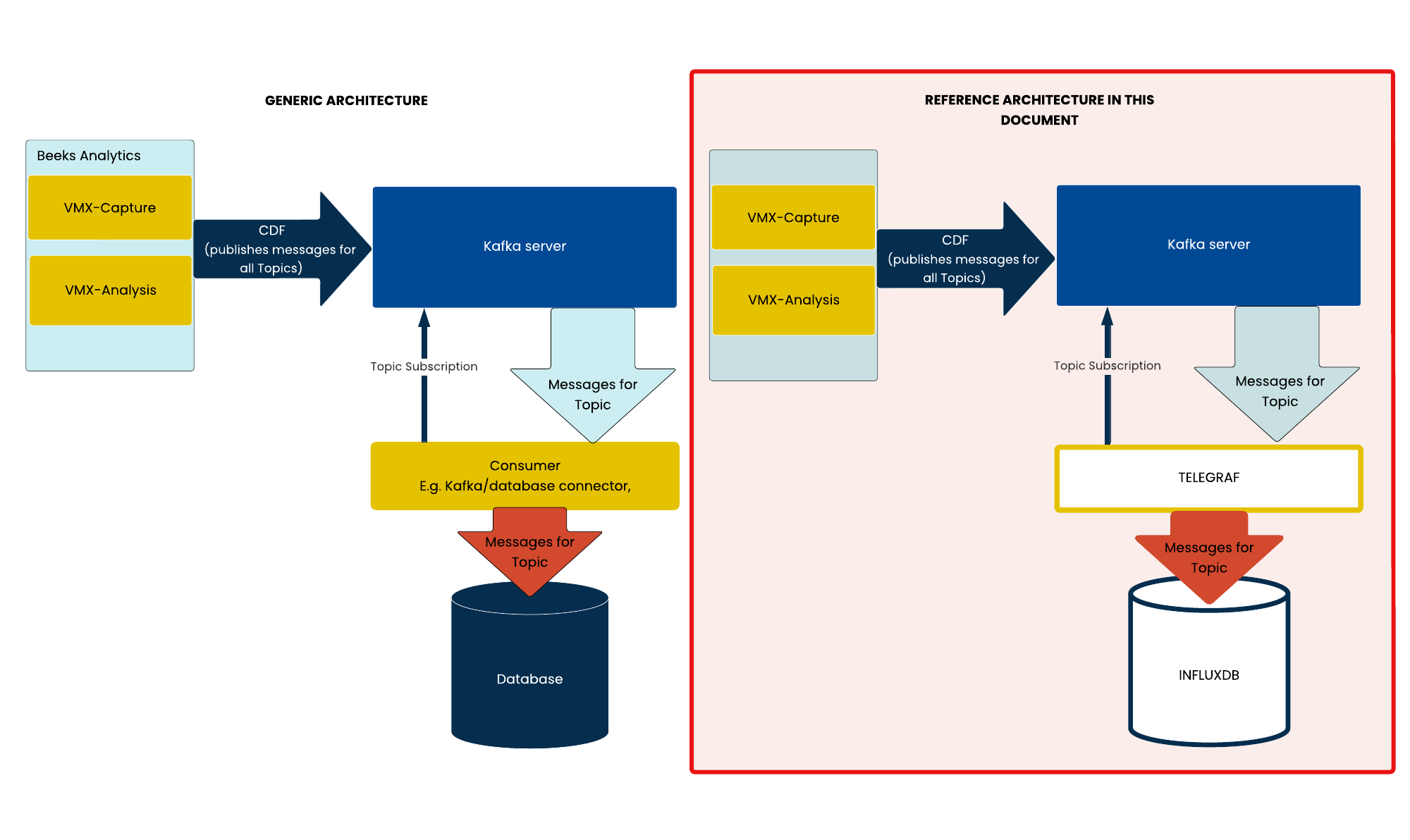

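Kafka is a publish/subscribe messaging system. Producers publish messages to topics, and Consumers subscribe to those topics and read the messages via the Consumer API. Between the Producers and Consumers sit storage servers called Brokers, which persist the messages in each topic. Kafka provides its delivery guarantees through two mechanisms: Brokers acknowledge messages written by Producers, and Consumers commit the offset of the messages they have processed, so a Consumer that restarts resumes from its last committed position. For a good introduction to Kafka, see here.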
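To make the Producer/Broker/Consumer relationship concrete, the following is a toy, in-memory model of a topic log with consumer-group offset commits. It is an illustration of the concept only, not Kafka's API: it ignores partitions, replication and networking, and the topic name `cdf-demo` and group name `telegraf` are hypothetical.

```python
from collections import defaultdict


class ToyBroker:
    """In-memory stand-in for a Kafka Broker: one append-only log per topic.

    Real Kafka splits topics into replicated partitions; this toy ignores that.
    """

    def __init__(self):
        self.logs = defaultdict(list)  # topic -> list of messages
        self.committed = {}            # (group, topic) -> next offset to read

    def produce(self, topic, message):
        """Append a message to the topic's log and return its offset."""
        self.logs[topic].append(message)
        return len(self.logs[topic]) - 1

    def poll(self, group, topic, max_records=10):
        """Return (offset, message) pairs starting at the group's committed offset."""
        start = self.committed.get((group, topic), 0)
        log = self.logs[topic]
        return list(enumerate(log[start:start + max_records], start=start))

    def commit(self, group, topic, next_offset):
        """Record how far a consumer group has processed the topic."""
        self.committed[(group, topic)] = next_offset


broker = ToyBroker()
for i in range(3):
    broker.produce("cdf-demo", {"seq": i})  # hypothetical topic name

batch = broker.poll("telegraf", "cdf-demo", max_records=2)
# Process the batch first, then commit the offset after the last processed record.
broker.commit("telegraf", "cdf-demo", batch[-1][0] + 1)

# A restarted consumer in the same group resumes after the committed offset.
remaining = broker.poll("telegraf", "cdf-demo")
print(remaining)  # [(2, {'seq': 2})]
```

Committing only after processing means a crash between the two steps causes the batch to be re-read rather than skipped, which is the usual at-least-once pattern.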

Topics

The topics published by the CDF are detailed in Core Data Feed output.

Data format

The CDF outputs to Kafka in JSON.
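A Consumer therefore has to decode each message payload as JSON. The sketch below decodes a payload and flattens nested objects into single-level keys joined with underscores, loosely similar to how Telegraf's JSON input format treats nested objects. The message and its field names are hypothetical; the real fields are defined by the CDF topics.

```python
import json


def flatten(obj, prefix="", sep="_"):
    """Flatten nested JSON objects into a single-level dict.

    Nested keys are joined with `sep`, e.g. {"a": {"b": 1}} -> {"a_b": 1}.
    Lists and scalar values are kept as-is.
    """
    flat = {}
    for key, value in obj.items():
        name = f"{prefix}{sep}{key}" if prefix else key
        if isinstance(value, dict):
            flat.update(flatten(value, name, sep))
        else:
            flat[name] = value
    return flat


# Hypothetical CDF message payload as it might arrive from Kafka (raw bytes).
raw = b'{"stream": "CDF-T", "latency": {"min_ns": 1200, "max_ns": 5400}}'
record = flatten(json.loads(raw))  # json.loads accepts bytes in Python 3.6+
print(record)
# {'stream': 'CDF-T', 'latency_min_ns': 1200, 'latency_max_ns': 5400}
```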

Producer

In Beeks Analytics, the Producer is the CDF, which takes the following data streams:

CDF-M: Data stream from VMX-Capture.

CDF-T: Data stream from VMX-Capture.

CDF-I: Data stream, either from VMX-Analysis or in some circumstances (usually higher volume configurations) from VMX-Capture.

Consumer

Ready-to-use Kafka connectors are widely available, and you can also build your own Consumer using Kafka's Consumer API. The examples in this document use Telegraf as a Consumer to write messages to an InfluxDB database.

Telegraf is an agent for collecting, processing, and aggregating data. Telegraf has a Kafka Input Plugin that enables it to read data from Kafka.

InfluxDB is a time-series database that can store the data collected by the Consumer.
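Telegraf writes to InfluxDB using InfluxDB's line protocol, and Telegraf performs this conversion itself. The sketch below only illustrates the shape of a line-protocol record built from an already-flattened message; the measurement name `cdf_latency`, the tag and field names, and the timestamp are hypothetical, and the escaping covers only the common cases.

```python
def escape_key(text):
    """Escape commas, equals signs and spaces in tag keys, tag values and field keys."""
    return text.replace(",", r"\,").replace("=", r"\=").replace(" ", r"\ ")


def format_field(value):
    """Render a field value using line-protocol type rules."""
    if isinstance(value, bool):  # check bool before int: bool is an int subclass
        return "true" if value else "false"
    if isinstance(value, int):
        return f"{value}i"       # integers carry an 'i' suffix
    if isinstance(value, float):
        return repr(value)
    text = str(value).replace("\\", "\\\\").replace('"', '\\"')
    return f'"{text}"'           # strings are double-quoted


def to_line_protocol(measurement, tags, fields, timestamp_ns=None):
    """Build 'measurement,tag=v field=v [timestamp]'; at least one field is required."""
    if not fields:
        raise ValueError("line protocol requires at least one field")
    meas = measurement.replace(",", r"\,").replace(" ", r"\ ")
    # Tags sorted by key, as InfluxDB recommends for write performance.
    tag_part = "".join(f",{escape_key(k)}={escape_key(v)}" for k, v in sorted(tags.items()))
    field_part = ",".join(f"{escape_key(k)}={format_field(v)}" for k, v in fields.items())
    line = f"{meas}{tag_part} {field_part}"
    if timestamp_ns is not None:
        line += f" {timestamp_ns}"  # nanosecond-precision Unix timestamp
    return line


line = to_line_protocol(
    "cdf_latency",  # hypothetical measurement name
    {"stream": "CDF-T"},
    {"latency_min_ns": 1200, "latency_max_ns": 5400},
    timestamp_ns=1700000000000000000,
)
print(line)
# cdf_latency,stream=CDF-T latency_min_ns=1200i,latency_max_ns=5400i 1700000000000000000
```

Low-cardinality values such as the stream name are a natural fit for tags, which InfluxDB indexes, while numeric measurements belong in fields.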